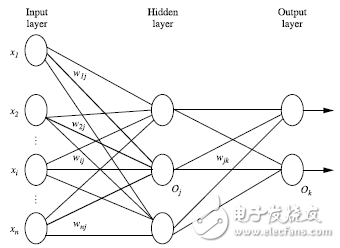

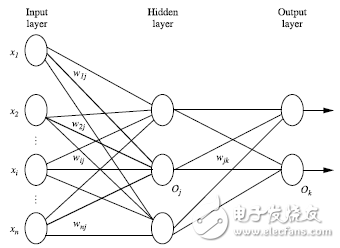

First, the multi-layer feedforward neural network. A multi-layer feedforward neural network is composed of three main components: an input layer, one or more hidden layers, and an output layer. Each layer consists of neurons (also called units) that process information. The input layer receives the feature vector of a training example. The output of one layer serves as the input for the next, and the weights on the connections between neurons are adjusted during training. The number of hidden layers can vary depending on the complexity of the problem, but there is only one input layer and one output layer. Because the input layer is not counted, a network with n hidden layers is referred to as an (n+1)-layer neural network. For example, a 2-layer neural network includes one hidden layer and one output layer. Each neuron computes a weighted sum of its inputs and applies a nonlinear activation function to produce its output. In theory, given enough hidden units and enough training data, a neural network can approximate any continuous function to arbitrary accuracy.

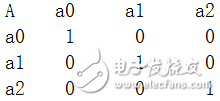

Second, designing the neural network structure. Before training a neural network, it's essential to define the architecture, including the number of layers and the number of neurons in each layer. To improve convergence speed, the input features are usually normalized to a range between 0 and 1. Discrete features can be encoded using one-hot encoding, where each possible value of a feature is represented by a separate input neuron. For instance, if a feature has three possible values (a0, a1, a2), three input neurons are used. When the feature takes the value a0, the corresponding neuron is set to 1, while the others are set to 0; the same applies to a1 and a2. Neural networks can be used for both classification and regression tasks. For binary classification, a single output neuron can represent the two classes (e.g., 0 and 1). For multi-class classification, the number of output neurons matches the number of classes. There are no strict rules for determining the optimal number of hidden layers; in practice it is found by trial and error, comparing candidate architectures on performance metrics such as accuracy and error rate.
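The two input-preparation steps described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the helper names `min_max_normalize` and `one_hot` are hypothetical, and min-max scaling is assumed as the normalization method, since the text only says that features are scaled to the range 0 to 1.

```python
def min_max_normalize(values):
    """Scale a list of numbers linearly into the range [0, 1] (assumed method)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant feature has no spread to scale; map every value to 0.0.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def one_hot(value, categories):
    """Return one input per category: 1 for the matching category, 0 otherwise."""
    if value not in categories:
        raise ValueError(f"unknown category: {value!r}")
    return [1 if value == c else 0 for c in categories]


categories = ["a0", "a1", "a2"]
encoded = one_hot("a0", categories)              # [1, 0, 0]
scaled = min_max_normalize([10.0, 15.0, 20.0])   # [0.0, 0.5, 1.0]
```

A feature with three possible values therefore contributes three input neurons, exactly one of which is active for any given example.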

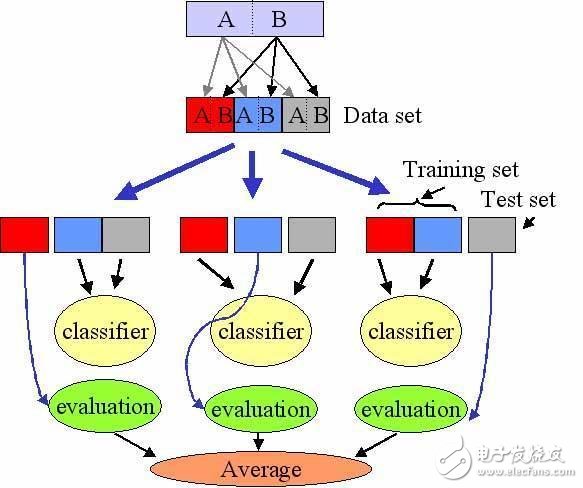

Third, the cross-validation method. Cross-validation is a widely used technique in machine learning to evaluate the performance of a model. Instead of splitting the data into just a training set and a test set, the data is divided into k parts, known as folds. In k-fold cross-validation, the model is trained k times, each time using a different fold as the test set and the remaining k-1 folds as the training set. After completing all k iterations, the average accuracy across all test folds is calculated. This approach provides a more reliable estimate of the model's performance than a single train-test split, because every example is used for testing exactly once. Cross-validation does not prevent overfitting by itself, but it helps detect it and indicates how well the model is likely to generalize to unseen data.
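The fold-splitting procedure can be sketched as follows. The function names `k_fold_indices` and `cross_validate` are hypothetical, and `train_and_score` stands in for whatever training and evaluation routine is actually used; it is assumed to take the training and test indices and return a score such as accuracy. The data is split in its given order here, whereas in practice it is usually shuffled first.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) pairs for k-fold cross-validation."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    # Spread the remainder so that fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size


def cross_validate(n_samples, k, train_and_score):
    """Average the score returned by train_and_score over all k folds."""
    scores = [train_and_score(train, test)
              for train, test in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores)


# With 10 samples and k=3, the test folds have sizes 4, 3 and 3.
folds = list(k_fold_indices(10, 3))
```

Each index appears in exactly one test fold, so every example contributes to the averaged score once.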

Fourth, the backpropagation algorithm. Backpropagation is a fundamental algorithm used to train neural networks. It works by iteratively adjusting the weights of the network to minimize the error between the predicted output and the actual target. The process involves two main steps: forward propagation and backward propagation. During forward propagation, the input is passed through the network to compute the output. Then the error is calculated and propagated backward through the network, layer by layer, to determine how much each weight contributed to it, and the weights and biases are updated accordingly. This iterative process continues until the network achieves satisfactory performance.
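The forward and backward passes can be illustrated with a small network written in plain Python. This is a sketch under several assumptions that the text does not fix: one hidden layer, sigmoid activations, a squared-error loss, per-example gradient-descent updates, and the XOR truth table as toy data. The function names and the default values for `n_hidden`, `lr`, `epochs` and `seed` are chosen for illustration only.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(x, w_h, b_h, w_o, b_o):
    """Return (hidden activations, output activation) for one input vector."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
              for ws, b in zip(w_h, b_h)]
    output = sigmoid(sum(w * h for w, h in zip(w_o, hidden)) + b_o)
    return hidden, output


def total_error(data, w_h, b_h, w_o, b_o):
    """Half the sum of squared errors over the dataset."""
    return sum(0.5 * (t - forward(x, w_h, b_h, w_o, b_o)[1]) ** 2 for x, t in data)


def train(data, n_hidden=3, lr=0.5, epochs=5000, seed=0):
    """Train a one-hidden-layer network; return (initial error, final error)."""
    rng = random.Random(seed)
    n_in = len(data[0][0])
    # Random initial weights and biases in [-1, 1].
    w_h = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    b_h = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    w_o = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    b_o = rng.uniform(-1, 1)
    initial = total_error(data, w_h, b_h, w_o, b_o)
    for _ in range(epochs):
        for x, t in data:
            # Forward pass.
            hidden, out = forward(x, w_h, b_h, w_o, b_o)
            # Backward pass: error term of the output unit, then of each hidden unit.
            delta_o = (out - t) * out * (1 - out)
            delta_h = [delta_o * w_o[j] * hidden[j] * (1 - hidden[j])
                       for j in range(n_hidden)]
            # Gradient-descent updates, using the error terms computed above.
            for j in range(n_hidden):
                w_o[j] -= lr * delta_o * hidden[j]
                b_h[j] -= lr * delta_h[j]
                for i in range(n_in):
                    w_h[j][i] -= lr * delta_h[j] * x[i]
            b_o -= lr * delta_o
    return initial, total_error(data, w_h, b_h, w_o, b_o)


xor_data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]
initial_error, final_error = train(xor_data)
```

Comparing `initial_error` with `final_error` shows whether the repeated forward and backward passes reduced the error on the training data; how far it drops depends on the random initialization and the chosen hyperparameters.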

4.1 Detailed description of the algorithm:

Input: a dataset, a learning rate, and a defined neural network architecture.

Output: a trained neural network.

Initialization: Weights and biases are randomly initialized within a specific range (e.g., -1 to 1). Each neuron has a bias term. For each training example X, the following steps are performed:

1. Present X to the input layer; each input neuron passes its feature value on unchanged.

2. Compute the activations for the hidden layer.

3. Compute the activations for the output layer.

The weighted sum of inputs for each neuron is calculated, followed by applying a nonlinear activation function. This transformation allows the network to learn complex patterns in the data. The formula for computing the output of a neuron is:

$$ O_j = f\left( \sum_{i} w_{ij} \cdot O_i + b_j \right) $$

Here $O_j$ is the output of neuron $j$ in the current layer, $O_i$ is the output of neuron $i$ in the previous layer, $w_{ij}$ is the weight on the connection from neuron $i$ to neuron $j$, and $b_j$ is the bias term of neuron $j$. The function $f$ is the activation function, such as the sigmoid or ReLU function.
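As a worked instance of the formula, the sketch below computes the outputs of one layer from the outputs of the previous layer. The function name `layer_output` and the numbers are illustrative assumptions; `weights[i][j]` follows the $w_{ij}$ indexing above, from neuron $i$ in the previous layer to neuron $j$ in the current one.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def relu(x):
    return max(0.0, x)


def layer_output(prev_outputs, weights, biases, f):
    """Compute O_j = f(sum_i w_ij * O_i + b_j) for every neuron j in the layer."""
    return [
        f(sum(weights[i][j] * prev_outputs[i] for i in range(len(prev_outputs)))
          + biases[j])
        for j in range(len(biases))
    ]


prev = [1.0, 2.0]               # O_i: outputs of the previous layer
weights = [[0.5, -1.0],         # w_00, w_01
           [0.25, 0.5]]         # w_10, w_11
biases = [0.0, -1.0]            # b_0, b_1

# Weighted sums: neuron 0 -> 0.5*1 + 0.25*2 + 0 = 1.0
#                neuron 1 -> -1.0*1 + 0.5*2 - 1 = -1.0
relu_out = layer_output(prev, weights, biases, relu)        # [1.0, 0.0]
sigmoid_out = layer_output(prev, weights, biases, sigmoid)
```

Applying the same function layer by layer, with each result passed in as `prev_outputs` for the next layer, gives the full forward propagation.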
