Reinforcement learning (RL) is one of the most popular research topics today, and its popularity is growing day by day. Let's look at five things you need to know about reinforcement learning.

▌ 1. What exactly is reinforcement learning? How does it relate to other machine learning techniques?

Reinforcement learning is a branch of machine learning in which an agent learns by trial and error in an interactive environment, using feedback from its own actions and experiences.

Both supervised learning and reinforcement learning learn a mapping between input and output. The difference is that in supervised learning the feedback given to the agent is the correct set of actions for performing a task, whereas reinforcement learning uses rewards and punishments as signals for positive and negative behavior.

Compared with unsupervised learning, reinforcement learning differs in its goal. The goal of unsupervised learning is to find similarities and differences between data points, while the goal of reinforcement learning is to find a suitable action model that maximizes the agent's total cumulative reward.

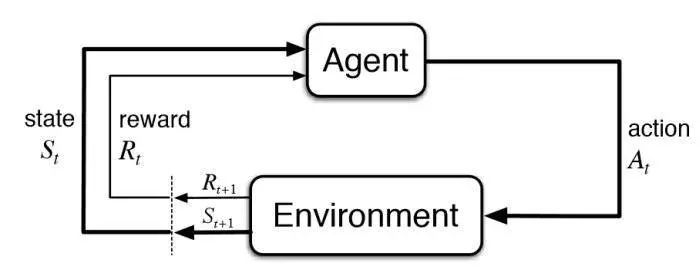

The basic ideas and elements involved in the reinforcement learning model are shown below:

▌ 2. How do you formulate a basic reinforcement learning problem?

Several key elements describe a reinforcement learning problem:

Environment: The physical world in which the agent operates;

State: The current situation of the agent;

Reward: Feedback from the environment;

Policy: A method for mapping the agent's state to actions;

Value: The future reward an agent would receive by taking an action in a particular state.

Some games illustrate reinforcement learning problems well. Take PacMan as an example: the goal of PacMan is to eat as many beans in the grid as possible while avoiding the ghosts. The grid world is the agent's interactive environment. PacMan receives a reward for eating a bean and is punished if he is killed by a ghost (which ends the game). In this case, the state is PacMan's position in the grid world, and the total cumulative reward is PacMan winning the game.
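To make these elements concrete, here is a minimal Python sketch of the agent-environment loop. It assumes a hypothetical one-dimensional `CorridorPacMan` environment, a toy stand-in rather than the real PacMan grid: the state is PacMan's position, eating a bean gives +1, and running into the ghost gives -10 and ends the game. The policy is simply any function from state to action.

```python
import random


class CorridorPacMan:
    """Hypothetical 1-D stand-in for PacMan's grid world (not the real game).

    State: PacMan's position in cells 0..length-1.
    Actions: -1 (move left) or +1 (move right).
    Rewards: +1 for eating a bean, -10 for running into the ghost.
    """

    def __init__(self, length=6, beans=(2, 3, 5), ghost=0, start=1):
        self.length = length
        self.initial_beans = frozenset(beans)
        self.ghost = ghost
        self.start = start
        self.reset()

    def reset(self):
        self.pos = self.start
        self.beans = set(self.initial_beans)
        return self.pos  # the state is just PacMan's position

    def step(self, action):
        # Move one cell, staying inside the corridor.
        self.pos = min(max(self.pos + action, 0), self.length - 1)
        if self.pos == self.ghost:
            return self.pos, -10.0, True  # caught by the ghost: punished, game over
        reward = 0.0
        if self.pos in self.beans:
            self.beans.remove(self.pos)
            reward = 1.0  # ate a bean: rewarded
        done = not self.beans  # every bean eaten: game won
        return self.pos, reward, done


def run_episode(env, policy, max_steps=50):
    """Agent-environment loop: observe the state, act via the policy, collect rewards."""
    state = env.reset()
    total = 0.0
    for _ in range(max_steps):
        state, reward, done = env.step(policy(state))
        total += reward
        if done:
            break
    return total


if __name__ == "__main__":
    env = CorridorPacMan()
    print(run_episode(env, lambda s: +1))  # always move right; prints 3.0
    print(run_episode(env, lambda s: random.choice((-1, 1))))  # random policy
```

Swapping in a different `policy` function changes the agent's behavior without touching the environment, which mirrors the separation between environment and policy in the list above.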

To find an optimal policy, the agent has to explore new states while at the same time maximizing its overall reward. This is the so-called trade-off between exploration and exploitation.
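One common and simple way to handle this trade-off (among several) is an epsilon-greedy rule. The sketch below assumes the agent already keeps a list of estimated values, one per action; `epsilon_greedy` and its parameters are illustrative names, not taken from any particular library.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Choose an action index given the agent's current value estimates.

    With probability epsilon, explore: pick a uniformly random action.
    Otherwise, exploit: pick an action with the highest estimated value.
    """
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    best = max(q_values)
    # Break ties randomly so equally good actions all get tried.
    return rng.choice([a for a, q in enumerate(q_values) if q == best])


if __name__ == "__main__":
    rng = random.Random(42)
    estimates = [0.2, 0.8, 0.5]  # hypothetical value estimates for 3 actions
    picks = [epsilon_greedy(estimates, epsilon=0.1, rng=rng) for _ in range(1000)]
    # Mostly action 1 (exploitation), with occasional other actions (exploration).
    print({a: picks.count(a) for a in range(len(estimates))})
```

A larger `epsilon` means more exploration; in practice it is often decayed over time so the agent explores early and exploits later.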

The Markov Decision Process (MDP) is the mathematical framework used to describe an environment in reinforcement learning, and almost all RL problems can be formalized as MDPs. An MDP consists of a finite set of environment states S, a set of possible actions A(s) in each state, a real-valued reward function R(s), and a transition model P(s' | s, a). However, in real-world environments, prior knowledge of the environment dynamics is often unavailable. Model-free RL methods are convenient in such cases, because they learn directly from interaction without needing P.
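When P and R are fully known, an MDP can be solved directly, which is exactly the knowledge that model-free methods do without. As a point of contrast, here is a minimal value-iteration sketch for a small, hypothetical two-state MDP; the state and action names and the dict-based encoding of P(s' | s, a) are assumptions made for illustration.

```python
def value_iteration(P, R, gamma=0.9, tol=1e-8):
    """Solve a small MDP whose dynamics are fully known (a model-based method).

    P[s][a] is a dict {s2: P(s2 | s, a)}; R[s] is the reward R(s).
    Repeats the Bellman optimality backup
        V(s) = R(s) + gamma * max_a sum_{s2} P(s2 | s, a) * V(s2)
    until the values stop changing.
    """
    def expected_next_value(s, a, V):
        return sum(p * V[s2] for s2, p in P[s][a].items())

    V = {s: 0.0 for s in P}
    while True:
        delta = 0.0
        for s in P:
            new_v = R[s] + gamma * max(expected_next_value(s, a, V) for a in P[s])
            delta = max(delta, abs(new_v - V[s]))
            V[s] = new_v
        if delta < tol:
            break
    # Greedy policy with respect to the converged values.
    policy = {s: max(P[s], key=lambda a, s=s: expected_next_value(s, a, V)) for s in P}
    return V, policy


if __name__ == "__main__":
    # Hypothetical two-state MDP: "work" tends to move the agent to the rewarding state.
    P = {
        "low": {"wait": {"low": 1.0}, "work": {"high": 0.8, "low": 0.2}},
        "high": {"wait": {"high": 0.9, "low": 0.1}, "work": {"high": 1.0}},
    }
    R = {"low": 0.0, "high": 1.0}
    V, policy = value_iteration(P, R)
    print(V, policy)
```

Every backup reads `P` directly; a model-free method such as Q-learning replaces that expectation with sampled transitions.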

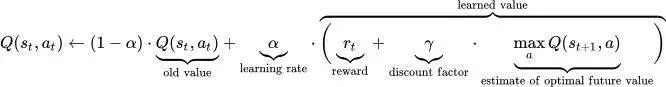

The Q-learning model is a widely used model that does not understand environmental reinforcement learning, so it can be used to simulate PacMan agents. The rule of the Q-learning model is to perform action a under state S and constantly update the Q value, and iteratively update the variable value algorithm is the core of the algorithm.

Figure 2: Reinforcement Learning Update Rule
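In its standard textbook form, the tabular Q-learning update is Q(s, a) ← Q(s, a) + α[r + γ max_a' Q(s', a') − Q(s, a)], where α is the learning rate and γ the discount factor. Below is a minimal sketch of that rule on a hypothetical five-state chain world (start at state 0, reward +1 for reaching the last state); the environment, hyperparameters, and function names are assumptions for illustration.

```python
import random
from collections import defaultdict


def q_learning_update(Q, s, a, r, s_next, actions, alpha, gamma, done):
    """Tabular Q-learning backup:
    Q(s, a) <- Q(s, a) + alpha * (r + gamma * max_b Q(s_next, b) - Q(s, a)).
    A terminal transition has no future value, so its target is just r.
    """
    target = r if done else r + gamma * max(Q[(s_next, b)] for b in actions)
    Q[(s, a)] += alpha * (target - Q[(s, a)])


def greedy(Q, s, actions, rng):
    best = max(Q[(s, b)] for b in actions)
    return rng.choice([b for b in actions if Q[(s, b)] == best])  # random tie-break


def train_chain(n_states=5, episodes=2000, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    """Hypothetical chain world: start in state 0, reward +1 on reaching the last state."""
    rng = random.Random(seed)
    actions = (-1, +1)  # move left / move right
    Q = defaultdict(float)  # unseen (state, action) pairs start at 0
    for _ in range(episodes):
        s = 0
        for _ in range(100):  # cap episode length
            # Epsilon-greedy behavior policy.
            a = rng.choice(actions) if rng.random() < epsilon else greedy(Q, s, actions, rng)
            s_next = min(max(s + a, 0), n_states - 1)
            done = s_next == n_states - 1
            r = 1.0 if done else 0.0
            q_learning_update(Q, s, a, r, s_next, actions, alpha, gamma, done)
            s = s_next
            if done:
                break
    return Q


if __name__ == "__main__":
    Q = train_chain()
    for s in range(4):
        print(s, {a: round(Q[(s, a)], 3) for a in (-1, +1)})
```

After training, "move right" should have the higher Q value in every non-terminal state, and the values shrink by roughly a factor of γ for each step further from the goal.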

Here is a video of a deep reinforcement learning agent playing PacMan:

https://?v=QilHGSYbjDQ

▌ 3. What are the most commonly used reinforcement learning algorithms?

Q-learning and SARSA (State-Action-Reward-State-Action) are two commonly used model-free RL algorithms. They differ in their exploration strategies, while their exploitation strategies are similar. Q-learning is an off-policy method: the agent learns the value based on an action a* derived from another policy. SARSA is an on-policy method: the agent learns the value based on its current action a, derived from its current policy. Both methods are simple to implement but lack generality, because they cannot estimate values for unseen states.
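The off-policy versus on-policy difference comes down to the target each algorithm bootstraps from. This sketch, with hypothetical state and action names and made-up Q values, computes both targets for the same transition when the exploring policy happens to pick the worse next action.

```python
def q_learning_target(Q, r, s_next, actions, gamma):
    # Off-policy: bootstrap from the best next action (the greedy policy),
    # whatever action the exploring behavior policy actually takes next.
    return r + gamma * max(Q[(s_next, b)] for b in actions)


def sarsa_target(Q, r, s_next, a_next, gamma):
    # On-policy: bootstrap from the next action a_next that the current
    # (for example epsilon-greedy) policy actually selected.
    return r + gamma * Q[(s_next, a_next)]


def td_update(Q, s, a, target, alpha):
    # Both algorithms share this step; only the target differs.
    Q[(s, a)] += alpha * (target - Q[(s, a)])


if __name__ == "__main__":
    # Hypothetical values: in state "s1", "right" looks better than "left".
    Q = {("s0", "go"): 0.0, ("s1", "left"): 0.0, ("s1", "right"): 2.0}
    # Suppose the agent took "go" in "s0", got r = 1, landed in "s1",
    # and its exploring policy then picked the worse action "left".
    print(q_learning_target(Q, 1.0, "s1", ["left", "right"], gamma=0.5))  # 2.0
    print(sarsa_target(Q, 1.0, "s1", "left", gamma=0.5))  # 1.0
```

Because SARSA's target reflects the exploratory actions it actually takes, it tends to learn more cautious values than Q-learning when exploration is risky.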

More advanced algorithms can overcome this limitation. Deep Q-Networks (DQNs) use neural networks to estimate Q values, but DQNs can only handle discrete, low-dimensional action spaces. Deep Deterministic Policy Gradient (DDPG) is a model-free, off-policy, actor-critic algorithm that tackles this problem by learning policies in high-dimensional, continuous action spaces.
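The key idea behind DQN is replacing the Q table with a parameterized function, so that experience in one state informs estimates in others. DQN itself uses a deep neural network together with additions such as experience replay and a target network. The sketch below deliberately swaps in a much simpler linear model with hypothetical hand-made features, only to show how a function approximator produces values for states it has never seen.

```python
def features(state, action):
    """Hypothetical hand-crafted features of a (state, action) pair.

    States are numbers and actions are -1 or +1; the features are shared by
    all states, which is what lets the model generalize to unseen ones.
    """
    return [1.0, float(state * action), float(action)]


def q_hat(w, state, action):
    """Linear estimate of Q(state, action) = w . features(state, action)."""
    return sum(wi * xi for wi, xi in zip(w, features(state, action)))


def semi_gradient_q_update(w, s, a, r, s_next, actions, alpha, gamma, done):
    """One Q-learning step for a linear model; returns the updated weights."""
    target = r if done else r + gamma * max(q_hat(w, s_next, b) for b in actions)
    td_error = target - q_hat(w, s, a)
    # For a linear model, the gradient of q_hat with respect to w is the feature vector.
    return [wi + alpha * td_error * xi for wi, xi in zip(w, features(s, a))]


if __name__ == "__main__":
    w = [0.0, 0.0, 0.0]
    # A single observed transition in state 1...
    w = semi_gradient_q_update(w, 1, +1, 1.0, 2, (-1, +1), alpha=0.5, gamma=0.9, done=True)
    # ...already yields an estimate for state 7, which was never visited.
    print(q_hat(w, 7, +1))  # 4.5
```

Whether such extrapolated values are any good depends entirely on the choice of features or network architecture; a DQN learns its features instead of relying on hand-made ones.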

▌ 4. What are the practical applications of reinforcement learning?

Because reinforcement learning requires a lot of data, it is most applicable in domains where simulated data is readily available, such as games and robotics.

Reinforcement learning is widely used to build AI players for games. DeepMind's AlphaGo was the first computer program to defeat a world champion at the ancient Chinese game of Go, and its successor AlphaGo Zero learned the game purely from self-play, without human game records. RL agents have also performed outstandingly in Atari games and backgammon.

Reinforcement learning is also used in robotics and industrial automation, where it lets robots build efficient, adaptive control systems for themselves that learn from their own experience and behavior. The research paper "Deep Reinforcement Learning for Robotic Manipulation with Asynchronous Off-Policy Updates" is a good example.

Watch this interesting demo video: https://?v=ZhsEKTo7V04&t=48s

Other applications of reinforcement learning include text summarization engines, dialogue agents (text, speech) that learn from user interactions and improve over time, learning optimal treatment policies in healthcare, and RL-based agents for online stock trading.

▌ 5. How can I get started with reinforcement learning?

Readers can learn more about the basic concepts of reinforcement learning from the following resources:

"Reinforcement Learning: An Introduction" is a book by Richard Sutton, the father of reinforcement learning, and his doctoral advisor Andrew Barto. The electronic version of the book is available at http://incompleteideas.net/book/the-book-2nd.html.

David Silver's lecture course, including its video lectures, gives readers a good grounding in the basics of reinforcement learning: http://Teaching.html

Pieter Abbeel and John Schulman's technical tutorial on policy optimization is also good study material: http://people.eecs.berkeley.edu/~pabbeel/nips-tutorial-policy-optimization-Schulman-Abbeel.pdf

Start building and testing RL agents

If you want to start building and testing reinforcement learning agents, Andrej Karpathy's blog post is a good place to begin. It explains how to train a neural network agent for ATARI Pong from raw pixels using policy gradients, and provides 130 lines of Python code to help you build your first reinforcement learning agent: http://karpathy.github.io/2016/05/31/rl/

DeepMind Lab is an open-source, 3D game-like platform that provides rich simulated environments for agent-based AI research.

Project Malmo is another AI experimentation platform for supporting fundamental AI research: https://

OpenAI Gym is a toolkit for developing and comparing reinforcement learning algorithms: https://gym.openai.com/
