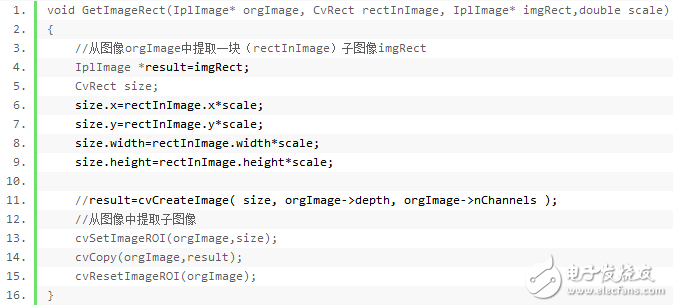

After the face detection step discussed earlier, the detected face region is extracted and saved so that it can be used for training or recognition.
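Here is a minimal sketch of this extraction step. It is not taken from the original program. It assumes the detector has already returned a bounding rectangle and the image is an 8-bit grayscale, row-major buffer. The face is cropped and scaled to the fixed size that eigenface training needs. `FaceRect` and `extract_face` are hypothetical names. In OpenCV's C API the same effect is usually achieved with `cvSetImageROI()` followed by `cvResize()`.

```c
#include <stddef.h>

/* Hypothetical stand-in for the detector's bounding box
   (OpenCV's C API uses CvRect for this). */
typedef struct { int x, y, width, height; } FaceRect;

/* Crop rectangle r out of an 8-bit grayscale image (src_w x src_h pixels,
   row-major) and rescale it to out_w x out_h using nearest-neighbour
   sampling. Returns 0 on success, -1 if r does not lie inside the image. */
int extract_face(const unsigned char *src, int src_w, int src_h, FaceRect r,
                 unsigned char *out, int out_w, int out_h)
{
    if (r.x < 0 || r.y < 0 || r.width <= 0 || r.height <= 0 ||
        r.x + r.width > src_w || r.y + r.height > src_h)
        return -1;

    for (int oy = 0; oy < out_h; oy++) {
        /* Map each output row back to a source row inside the rectangle. */
        int sy = r.y + (oy * r.height) / out_h;
        for (int ox = 0; ox < out_w; ox++) {
            int sx = r.x + (ox * r.width) / out_w;
            out[(size_t)oy * out_w + ox] = src[(size_t)sy * src_w + sx];
        }
    }
    return 0;
}
```

Calling `extract_face(gray, w, h, rect, face, 100, 100)` produces a fixed 100x100 crop. Nearest-neighbour sampling keeps the sketch short. A bilinear resize would give smoother results.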

Face Preprocessing

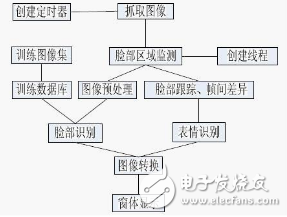

Once you have a face image, it’s tempting to use it directly for recognition. Without proper preprocessing, however, accuracy drops sharply: on raw, unprocessed photos a basic recognizer may get fewer than 10% of faces right. Real-world images vary in lighting, pose, expression, and background, and these variations can confuse the system.

In a robust face recognition system, preprocessing plays a crucial role. It helps standardize input images, making them more consistent and easier for the algorithm to process. For example, if your training data was captured in low light, the system might fail to recognize faces under bright conditions. This issue is known as "illumination dependency." Other factors like facial position, size, rotation, hair, accessories, expressions, and lighting direction also affect performance.

To address these challenges, preprocessing techniques are applied. These include converting color images to grayscale, normalizing brightness and contrast using methods like Histogram Equalization, and masking out unnecessary areas (such as hair or background) to focus on the face region. More advanced techniques may involve edge enhancement, contour detection, or even motion analysis for video-based systems.
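The first two steps can be sketched in plain C. The sketch assumes interleaved 8-bit RGB input and a single-channel 8-bit output buffer. `rgb_to_gray` and `equalize_hist` are hypothetical names. They follow the same idea as OpenCV's `cvCvtColor()` and `cvEqualizeHist()`, though rounding details may differ, and OpenCV images are usually stored in BGR rather than RGB order.

```c
#include <stddef.h>

/* Convert interleaved 8-bit RGB pixels to grayscale with the
   ITU-R BT.601 luma weights. */
void rgb_to_gray(const unsigned char *rgb, unsigned char *gray, size_t n_pixels)
{
    for (size_t i = 0; i < n_pixels; i++) {
        double y = 0.299 * rgb[3 * i] + 0.587 * rgb[3 * i + 1]
                 + 0.114 * rgb[3 * i + 2];
        gray[i] = (unsigned char)(y + 0.5);   /* round to nearest */
    }
}

/* Histogram equalization: remap grey levels through the cumulative
   histogram so they spread over the full 0..255 range. */
void equalize_hist(const unsigned char *src, unsigned char *dst, size_t n)
{
    size_t hist[256] = {0};
    for (size_t i = 0; i < n; i++) hist[src[i]]++;

    /* Cumulative histogram, plus its first non-zero value. */
    size_t cdf[256], total = 0, cdf_min = 0;
    for (int v = 0; v < 256; v++) {
        total += hist[v];
        cdf[v] = total;
        if (cdf_min == 0 && total > 0) cdf_min = total;
    }

    unsigned char lut[256];
    for (int v = 0; v < 256; v++) {
        if (n == cdf_min)                 /* flat image: leave it unchanged */
            lut[v] = (unsigned char)v;
        else if (cdf[v] < cdf_min)        /* level absent below the minimum */
            lut[v] = 0;
        else
            lut[v] = (unsigned char)((double)(cdf[v] - cdf_min) * 255.0
                                     / (double)(n - cdf_min) + 0.5);
    }
    for (size_t i = 0; i < n; i++) dst[i] = lut[src[i]];
}
```

The darkest level present maps to 0 and the brightest to 255. This is why a face captured in dim light and one captured in bright light end up with comparable contrast.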

PCA Principle

Once the face image is preprocessed, Principal Component Analysis (PCA) can be used for face recognition. OpenCV provides the `cvEigenDecomposite()` function, which projects a face onto a set of PCA eigenvectors. Those eigenvectors must first be learned from a training dataset that shows how different faces vary.

For example, if you want to recognize 10 people, you would collect around 20 preprocessed face images per person, resulting in 200 total images of the same size (e.g., 100x100 pixels). These images are then used to build a model that captures the main variations among them.

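The idea behind PCA is to find the most significant patterns, or "eigenfaces," that describe the differences between the training images. First, an average face is computed by averaging each pixel position across all training images. The average is then subtracted from every image, and the algorithm finds the principal components of what remains: the eigenvectors of the images' covariance matrix. These eigenvectors are the eigenfaces, ordered by how much of the dataset's variance each one captures.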

The first few eigenfaces capture the most important features, such as eye placement, nose shape, and mouth structure. Later eigenfaces tend to represent noise or minor variations. Typically, only the top 30–50 eigenfaces are used to maintain accuracy while reducing computational complexity.
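Instead of a fixed count such as 30–50, the cut-off can be chosen from the eigenvalues themselves. Keep the smallest number of leading eigenfaces whose eigenvalues cover a chosen fraction of the total variance. This is a different selection rule from the one stated above, shown only as an illustration. `choose_num_eigenfaces` is a hypothetical helper, and it assumes the eigenvalues are sorted in descending order.

```c
#include <stddef.h>

/* Smallest number of leading eigenfaces whose eigenvalues (sorted in
   descending order) account for at least `fraction` of the total
   variance. Returns 0 if the eigenvalues sum to zero or less. */
size_t choose_num_eigenfaces(const float *eig_vals, size_t n, double fraction)
{
    double total = 0.0;
    for (size_t i = 0; i < n; i++) total += eig_vals[i];
    if (total <= 0.0) return 0;

    double running = 0.0;
    for (size_t i = 0; i < n; i++) {
        running += eig_vals[i];
        if (running >= fraction * total) return i + 1;
    }
    return n;
}
```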

A single face image can be represented as a linear combination of the average face and the eigenfaces. For instance:

(Average Face) + (13.5% of Eigenface 0) – (34.3% of Eigenface 1) + (4.7% of Eigenface 2) + ...
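In general form, write $\boldsymbol{\mu}$ for the average face, $\mathbf{e}_i$ for the orthonormal eigenfaces, and $k$ for the number of eigenfaces kept. A face $\mathbf{x}$ is then approximated as shown below. The example above corresponds to $w_0 = 0.135$, $w_1 = -0.343$, $w_2 = 0.047$.

$$
\mathbf{x} \approx \boldsymbol{\mu} + \sum_{i=0}^{k-1} w_i\,\mathbf{e}_i,
\qquad
w_i = \mathbf{e}_i^{\top}\left(\mathbf{x} - \boldsymbol{\mu}\right).
$$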

The resulting coefficients form a compact description of the face. During recognition, a new image is projected onto the same eigenfaces to obtain its coefficients. The closest match is then found by comparing them with the coefficients stored for the training images, for example by Euclidean distance.
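Projection and matching can be sketched in a few lines. The sketch assumes the eigenfaces are orthonormal and stored one after another as `double` arrays, as in the formula for the weights. `project_face` and `nearest_face` are hypothetical names. `cvEigenDecomposite()` plays the role of `project_face` in OpenCV's C API. Squared Euclidean distance is used here as the simplest choice.

```c
#include <float.h>
#include <stddef.h>

/* Project face x onto k orthonormal eigenfaces of d pixels each:
   w[i] = e_i . (x - mean). */
void project_face(const double *x, const double *mean, const double *eig,
                  size_t k, size_t d, double *w)
{
    for (size_t i = 0; i < k; i++) {
        double s = 0.0;
        for (size_t j = 0; j < d; j++) s += eig[i * d + j] * (x[j] - mean[j]);
        w[i] = s;
    }
}

/* Index of the training face (n rows of k coefficients in train_w) whose
   coefficients are closest to w in squared Euclidean distance.
   Requires n > 0. If best_dist is non-NULL it receives that distance. */
size_t nearest_face(const double *w, const double *train_w, size_t n,
                    size_t k, double *best_dist)
{
    size_t best = 0;
    double best_d = DBL_MAX;
    for (size_t t = 0; t < n; t++) {
        double dist = 0.0;
        for (size_t i = 0; i < k; i++) {
            double diff = w[i] - train_w[t * k + i];
            dist += diff * diff;
        }
        if (dist < best_d) { best_d = dist; best = t; }
    }
    if (best_dist) *best_dist = best_d;
    return best;
}
```

Returning the distance lets the caller reject a match that is too far away, which would indicate an unknown person. Weighting each coefficient by its eigenvalue (a Mahalanobis-style distance) is a common alternative.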

Training Data

Creating a face recognition database involves organizing a list of images along with their corresponding labels. This is typically done using a text file, such as `trainingphoto.txt`, which maps each image to a person’s name. For example:

Joke1.jpg Joke2.jpg Joke3.jpg Joke4.jpg Lily1.jpg Lily2.jpg Lily3.jpg Lily4.jpg

This tells the program that "Joke" has four images, and "Lily" has another four. The function `loadFaceImgArray()` can then load these images into an array for processing.
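The text does not spell out how the person's name is derived from each entry. One plausible scheme, assumed here, takes the file name's prefix before the trailing number and extension, so "Joke3.jpg" belongs to "Joke". `label_from_filename` and `count_images_for` are hypothetical helpers, not part of `loadFaceImgArray()` or OpenCV.

```c
#include <ctype.h>
#include <stdio.h>
#include <string.h>

/* Derive a label from a file name of the form <Name><Number>.<ext>
   by dropping the extension and any trailing digits. Assumes the file
   name contains no other dots. The result is truncated to fit size. */
void label_from_filename(const char *file, char *label, size_t size)
{
    size_t len = strcspn(file, ".");            /* stop at the extension */
    while (len > 0 && isdigit((unsigned char)file[len - 1]))
        len--;                                   /* strip the image number */
    if (len >= size) len = size - 1;
    memcpy(label, file, len);
    label[len] = '\0';
}

/* Count how many names in a whitespace-separated list belong to person. */
int count_images_for(const char *list, const char *person)
{
    char file[256], label[64];
    int count = 0, used = 0;
    /* %n records how many characters the token (plus leading spaces) used. */
    while (sscanf(list, "%255s%n", file, &used) == 1) {
        label_from_filename(file, label, sizeof label);
        if (strcmp(label, person) == 0) count++;
        list += used;
    }
    return count;
}
```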

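To generate the PCA model, OpenCV's legacy C API provides two functions. `cvCalcEigenObjects()` computes the average face and the eigenfaces from the training set, and `cvEigenDecomposite()` computes a face's coefficients with respect to those eigenfaces. Here is a basic overview of `cvCalcEigenObjects()`: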

`void cvCalcEigenObjects( int nObjects, void* input, void* output, int ioFlags, int ioBufSize, void* userData, CvTermCriteria* calcLimit, IplImage* avg, float* eigVals );`

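- nObjects: Number of training images.
- input: The training images (an array of `IplImage*` when no callbacks are used).
- output: The computed eigenfaces (an array of pre-allocated `IplImage*` when no callbacks are used).
- ioFlags: Selects whether `input` and `output` are plain arrays or callback functions; `CV_EIGOBJ_NO_CALLBACK` means plain arrays.
- ioBufSize: Size of the input/output buffer in bytes, or 0 if unknown.
- userData: Pointer to data passed to the callback functions, if any.
- calcLimit: Termination criteria that limit how many eigenfaces are computed and to what accuracy.
- avg: The average face image.
- eigVals: Array of eigenvalues, in descending order, representing the importance of each eigenface.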

These functions build a compact representation of the face space, enabling efficient recognition. With proper preprocessing and enough training images per person, the system can identify individuals more reliably under varying conditions.
